At the end of July the Commission published a long-awaited “targeted consultation addressed to the participants to the stakeholder dialogue on Article 17 of the CDSM Directive”. With this consultation the Commission makes good on its (pre-covid) promise to “share initial views on the content of the Article 17 guidance” with the participants of the stakeholder dialogue. Nestled in between 18 questions, the consultation document provides a detailed outline of what the Commission’s guidance could look like once it is finalised.

While we were rather sceptical after the end of the six meetings of the stakeholder dialogue, we are pleased to see that the initial views shared by the Commission express a genuine attempt to find a balance between the protection of user rights and the interests of creators and other rightholders. This reflects the complex balance of the provisions introduced by Article 17 after a long legislative fight.

In the remainder of this post we will take a first, high-level look at the Commission’s proposal for the Article 17 guidance, what it would mean for national implementations and how it would affect user rights.

Two welcome clarifications

With the consultation document the Commission takes a clear position on two issues that were central to the discussions in the stakeholder dialogue and that have important implications for national implementation of Article 17.

The first one concerns the nature of the right at the core of Article 17. Is Article 17 a mere clarification of the existing right of communication to the public, as rightholders have argued, or is it a special or sui generis right, as academics and civil society groups have argued? In the consultation document the Commission makes it clear that it considers Article 17 to be a special right (“lex specialis”) to the right of communication to the public, as defined in Article 3 of the 2001 InfoSoc Directive, and the limited liability regime for hosting providers of the E-commerce Directive.

What sounds like a fairly technical discussion has wide-ranging consequences for Member States implementing the Directive. As explained by João Quintais and Martin Husovec, now that it is clear that Article 17 is not a mere clarification of existing law, Member States have considerably more freedom in deciding how online platforms can obtain authorisation for making available the works uploaded by their users. This should mean that they are not constrained by the InfoSoc Directive. Therefore, mechanisms like the remunerated “de-minimis” exception proposed by the German Ministry of Justice, which would legalise the use of short snippets of existing works, are permitted and covered by the concept of “authorisation” introduced by Article 17.

The second clarification contained in the consultation document deals with the very core of the discussion in the stakeholder dialogue. During the stakeholder dialogue, many rightholders had argued that the user rights safeguards contained in Article 17(7) (that measures to prevent the availability of copyrighted works “shall not result in the prevention of the availability of works or other subject matter uploaded by users, which do not infringe copyright”) should not have any direct consequences, as the complaint and redress mechanism introduced in Article 17(9) would provide sufficient protection of user rights. In other words, rightholders have argued that, even though no-one disputes that upload filters cannot recognise user rights and would structurally block legitimate uploads, this would be fine because users have the right to complain after the fact. In the consultation document the Commission does not mince words and makes it clear that this interpretation is wrong:

The objective should be to ensure that legitimate content is not blocked when technologies are applied by online content-sharing service providers […]

Therefore the guidance would take as a premise that it is not enough for the transposition and application of Article 17 (7) to only restore legitimate content ex post, once it has been blocked. When service providers apply automated content recognition technologies under Article 17(4) [..] legitimate uses should also be considered at the upload of content.

This clarification reaffirms the core of the balance struck by Article 17: Upload filters can only be used as long as their use does not lead to the removal of legitimate content. In their contributions to the stakeholder dialogue many rightholders have sought to negate this principle and the Commission should be complimented for defending the balance achieved through the legislative process. This is even more important since this means that the Commission is also taking a clear stance against the incomplete implementation proposals currently being discussed in the French and Dutch parliaments that, in line with the position brought forward by rightholders, ignore the user rights safeguards contained in Article 17(7).

A mechanism for protecting users’ rights

Now that the Commission has made it clear that any measures introduced to comply with Article 17(4) must also comply with the safeguards established in 17(7), how does it envisage this to work?

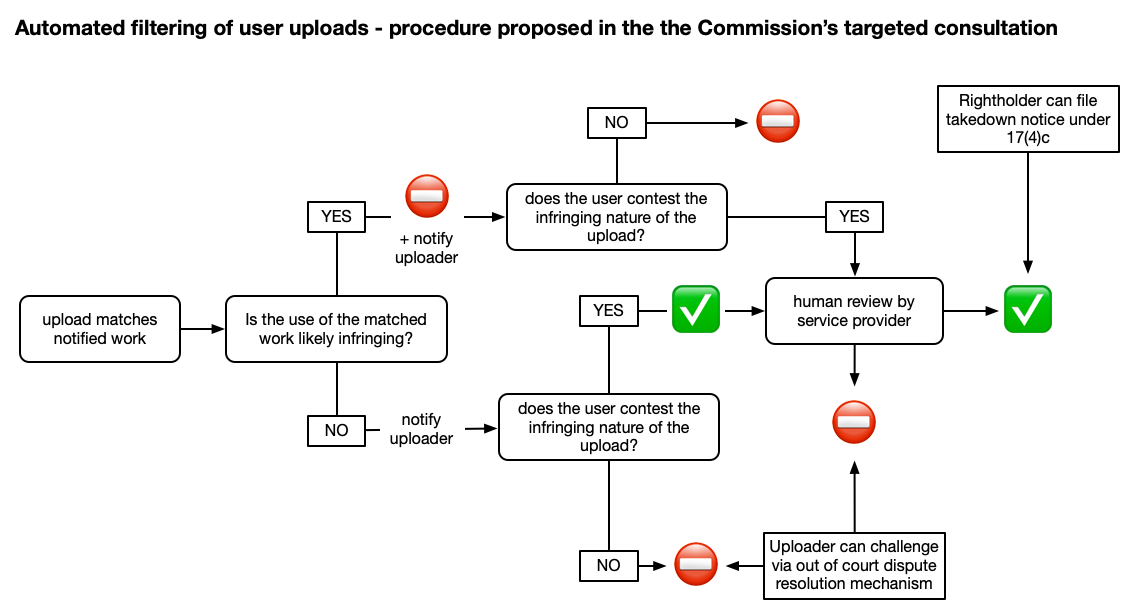

In section IV of the consultation document the Commission outlines a mechanism “for the practical application of Article 17(4) in compliance with Article 17(7)”. This mechanism is structurally similar to a proposal made last October by a large group of leading copyright scholars and to the mechanism we had proposed during the final meeting of the stakeholder dialogue in February of last year. At the core of the mechanism proposed by the Commission is a distinction between “likely infringing” and “likely legitimate” uploads:

… automated blocking of content identified by the rightholders should be limited to likely infringing uploads, whereas content, which is likely to be legitimate, should not be subjected to automated blocking and should be available.

The mechanism outlined by the Commission would allow “likely infringing content” to be blocked automatically (with users able to challenge the blocking afterwards). For uploads that include works that rightholders have requested to be blocked and for which platforms are unable “to determine on a reasonable basis whether [the] upload is likely to be infringing”, platforms would have to notify the uploaders and give them the possibility to assert their rights and overrule the automated filters. In this case the upload stays online until it has been reviewed by the platform. If, after requesting information from the rightholders and after human review, the platform comes to the conclusion that the upload is likely infringing, then it would still be removed. If, on the other hand, the platform comes to the conclusion that it is likely to be legitimate, then it will stay up (both rightholders and users keep the ability to further contest the outcome of the review by the platforms).
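To make this flow easier to follow, here is a minimal sketch in Python of the decision logic as we read it from the consultation document. It is an illustration only, not anything the Commission has specified: the names `Assessment`, `Status`, `initial_decision` and `after_human_review` are our own, and the handling of an upload whose uploader does not assert a legitimate use is an assumption on our part, since the description above does not address that case.

```python
from enum import Enum, auto


class Assessment(Enum):
    """How an upload matching a rightholder's reference file is classified."""
    LIKELY_INFRINGING = auto()
    LIKELY_LEGITIMATE = auto()
    UNDETERMINED = auto()  # platform cannot decide "on a reasonable basis"


class Status(Enum):
    BLOCKED = auto()
    ONLINE = auto()
    ONLINE_PENDING_REVIEW = auto()


def initial_decision(assessment: Assessment,
                     uploader_asserts_legitimate_use: bool = False) -> Status:
    """Decide what happens to a matched upload at upload time."""
    if assessment is Assessment.LIKELY_INFRINGING:
        # Automated blocking is allowed; the user can challenge it afterwards.
        return Status.BLOCKED
    if assessment is Assessment.LIKELY_LEGITIMATE:
        # Likely legitimate content must not be blocked automatically.
        return Status.ONLINE
    # Undetermined: the uploader is notified and may overrule the filter.
    if uploader_asserts_legitimate_use:
        return Status.ONLINE_PENDING_REVIEW
    # ASSUMPTION (not stated in the consultation document as summarised here):
    # without an assertion by the uploader, the upload is blocked.
    return Status.BLOCKED


def after_human_review(review_outcome: Assessment) -> Status:
    """Outcome once the platform has heard the rightholder and reviewed the upload."""
    if review_outcome is Assessment.LIKELY_INFRINGING:
        return Status.BLOCKED
    if review_outcome is Assessment.LIKELY_LEGITIMATE:
        return Status.ONLINE
    raise ValueError("human review must reach a likely infringing or likely legitimate outcome")
```

In this reading, only the first branch involves blocking without any human involvement. Either side can still contest the result of `after_human_review`, which the sketch does not model.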

This mechanism correctly acknowledges that “content recognition technology cannot assess whether the uploaded content is infringing or covered by a legitimate use” and gives users the ability to override some decisions made by upload filters. In doing so the Commission’s proposal hinges on the differentiation between “likely infringing” and “likely legitimate” uses. Unfortunately, the consultation document fails to further specify under what conditions uploads should be considered to be “likely infringing” (both our proposal and the academic statement proposed that automatic blocking would only be acceptable in cases where infringement is “obvious”). The consultation document provides the following three illustrative examples:

the upload of a video of 30 minutes, where 29 minutes are an exact match to a reference file provided by a rightholder, could likely be considered an infringing one, unless it is in the public domain or the use has been authorised. On the other hand, a user generated video composed by very short extracts, such as one or two minutes of different scenes from third party films, accompanied by additional content such as comments added by the user for the purpose of reviewing these scenes could be more likely to be legitimate because potentially covered by an exception such as the quotation exception. Similarly still images uploaded by users which match only partially the fingerprints of a professional picture could be legitimate uploads under the parody exception, as they could be ‘memes’, i.e. new images created by users by adding elements to an original picture to create a humoristic or parodic effect.

Although these examples are well chosen, three examples are far from enough to guarantee that the mechanism outlined by the Commission will provide sufficient protection from over-blocking.

This is made even worse by the fact that the Commission goes on to suggest that “the distinction between likely infringing and likely legitimate uploads could be carried out by service providers in cooperation with rightholders based on a number of technical characteristics of the upload, as appropriate”. From our perspective it is clear that unless user representatives also have a role in determining the technical parameters, the whole mechanism will likely be meaningless.

But in principle the approach outlined in the Commission’s consultation seems suitable to address the inherent tension between the inevitable use of automated content recognition technology to comply with the obligations created by Article 17(4) and the obligation to ensure that legitimate uses are not affected. It is a clear signal to Member States that in implementing Article 17 they need to protect user rights by legislative design and not as a mere afterthought.

And a few shortcomings

Apart from the lack of a definition of “likely infringing”, the consultation document contains a number of additional weak spots that we will address as part of our response to the consultation. While the mechanism outlined in the consultation document can safeguard uses that are legitimate because they fall within the scope of exceptions and limitations, it includes no safeguards to address other structural failures of automated content recognition systems. This includes the wrongful blocking of works that are openly licensed or in the public domain. The blocking of such works can best be prevented by giving users the ability to mark (or in the terms of the German proposal “pre-flag”) them as being legitimate from the start.

Another aspect of the Commission’s proposed guidance that needs to be strengthened is sanctions for false copyright claims by rightholders. Merely stating that “Member States should be free to define sanctions” seems insufficient to prevent Article 17 from structurally being abused by parties making wrongful claims of ownership.

Similarly, while it is welcome that the consultation document highlights the benefits of transparency for users, it does not go further than considering the possibility “to encourage Member States to put in place an exchange of information on authorisations between rightholders, users and service providers” and to “recommend that Member States encourage online content-sharing service providers to publicly report on the functioning of their practices with regard to Article 17(4).”

The consultation remains open for reactions (in principle limited to organisations participating in the stakeholder dialogue) until the 12th of September. Together with other user and digital rights organisations we will continue to stress the importance of including substantial protections for user rights in the Commission’s guidance. The first views presented by the Commission as part of the consultation are an important step in this direction. They clearly show that the Commission intends to use its ability to issue guidance as a means to uphold the legislative balance of Article 17. In light of this, Member States (especially those that, in their desire to implement quickly, have abandoned this balance) would be well advised to wait for the final version of the Commission’s guidance before adopting their implementations.